Don’t Bring Shiny Rocks to Digital Gunfights

Using Bitcoin to Impose Severe Physical Costs on AI


Shadilay, Pepes! The following is a summary of a mind-bending thought exercise that helped me more deeply consider the interconnected nature of recent technological breakthroughs, the alarming rate of advancement within each of these technologies, and the unique hazards that have emerged as a result. The truth is, humanity is not faced with just one revolutionary technological breakthrough (e.g. the internet, smartphones) that will dramatically change the way we live; we are faced with several, all converging on the same timeframe in history:

Decentralized (e.g. Bitcoin) / centralized (e.g. shitcoins, slavecoins) blockchains, artificial intelligence (AI), virtual reality (VR), 3D printing, big data, the internet of things, advanced/autonomous robotics, etc. are all here regardless of our personal desires and opinions. Right, wrong, or indifferent, these technologies have been around for decades, are advancing at exponential rates, and are already affecting the way we live.

If we are wise, we will dig deep to understand and harness the power of these technologies and mitigate the risks, to enable future generations to advance in knowledge, leverage efficiencies, and increase prosperity. If we are unwise, we will act like we can ignore or escape these technologies, allowing them to either take over autonomously in ways we do not desire, or be used by bad actors to enslave us in a digital prison from which there is no escape.

Each technology mentioned contains its own rabbit hole of data analysis and discussion. In this Substack, I can only hope to scratch the surface, point you to a small subset of pre-existing data/discussion, and provide my own unique perspective. As always, I encourage you to think outside of the box, do your own digging, verify my thought process, and come up with your own conclusions. I am not going to lie; this hazard is strange when you think about it. It’s almost like we are in an episode of THE PEPE ZONE.

To be clear, nature is dangerous and everything in life has risk. I think and speak in these terms out of habit, due to my professional experience as an Aerospace System Safety Engineer. I have spent tens of thousands of hours constantly identifying, considering, prioritizing, and mitigating hazards on some of the most complex systems known to man. This publication is a call to seriously consider hazards occurring because of these technological breakthroughs.

Fortunately, this is not an exercise in doom and gloom! I truly have faith that we can be successful at incrementally harnessing the different aspects of these technological breakthroughs for the betterment of humanity. However, to do this we must be unified, active, and diligent in continually identifying, considering, prioritizing, and mitigating the hazards/risks.

I would argue it is imperative for our survival!

The hazard I want to bring attention to focuses specifically on AI’s increasing ability to produce life-like data (e.g. text, audio, video), doing that at larger and larger scales, and the implications on humanity’s ability to distinguish truth (e.g. what really happened) from AI-generated fantasy. Eventually, these AI data sets will become so massive, so prolific, and so easily accessible/usable, there is a real risk they could overwhelm and obfuscate humanity’s ability to distinguish truth from AI-generated fantasy. More on this later.

The Substack will conclude with a high-level discussion on how Bitcoin can be used as a solution to implement “severe physical costs,” which the AI would not be able to pay, to enable a data filtration mechanism. Again, there is a lot that has been said on various aspects of what I am pulling together for this hazard discussion.

For me, this rabbit hole began while reading Softwar: A Novel Theory on Power Projection and the National Strategic Significance of Bitcoin. That wasn’t a typo!

[Soft]war is a new form of war doctrine that has been released through the thesis work of a United States Space Force (USSF) Major, Jason P. Lowery. It is different from its predecessor, [Hard]war, in that it is primarily fought on the digital battlefield, instead of the physical battlefield (noting there are physical elements and considerations for the system). Therefore, Softwar’s force projection happens in a non-kinetic manner.

The following figure illustrates the concept.

While we won’t go much deeper into that topic here, it strengthens the argument for the hazard mitigation presented later (i.e. we should be using and building on top of Bitcoin anyway). I do believe it is important to understand that we have a USSF Major laying out a solid, easy-to-understand thesis on how Bitcoin is a national strategic priority as a [weapon of peace] against all enemies (think AI), foreign and domestic. I encourage you to pick up a copy!

While studying this topic and following various Twitter accounts to glean insight into any additional data, discussions, and reactions that would come out of the thesis, I was inadvertently brought deeper down the AI rabbit hole. Before falling further, I had a fair understanding of the general ideas of AI, and its potential use cases, through professional experience and other research. Ultimately, there is only so much you can focus on at any one point, and AI wasn’t high on my priority list at the time. I was channeling my efforts into other areas of importance, of which there are many (e.g. the interconnections of Q / Trump / Bitcoin / Pepe that GMONEY and I discuss on Rugpull Radio). Therefore, I hadn’t given recent advancements, or the rate of change in the field of AI, very much critical thought. Note that the Softwar rabbit hole coincided with my reading of Jeff Booth’s book, The Price of Tomorrow.

In his book, Booth goes into greater detail on the technological aspects of the various topics I mentioned and discusses other hazards on the horizon (e.g. deflation) that we must control:

“Most people are generally aware of Moore’s law,” which observes “that the number of transistors on a printed circuit board” “continue [to double] every 2 years,” which produces a “doubling of computing power.”

This means that these technologies advance exponentially, with smaller-scale effects in the beginning and larger-scale effects toward the end. Each technology is advancing on its own unique timeline, but there are many interrelations between the different technologies. The effects of each technological advancement can be felt throughout various areas of life.

Think about the last ~30 years and how much faster technology has advanced with each passing year and how much different your life has become as a result. News flash, the trend will only continue, but now we are in the later stages of advancement, where the effects become dramatically more noticeable, and new technologies have emerged / matured to further add to the chaos.
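To put rough numbers on that, here is a minimal sketch of what a steady doubling every 2 years adds up to over ~30 years. It assumes a perfectly clean doubling, which real hardware only approximates, and the function name `growth_factor` is just something I made up for the illustration.

```python
# Illustrative arithmetic only: assumes a clean doubling every 2 years,
# which real hardware follows only approximately.

def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return how many times larger a quantity is after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)

for years in (2, 10, 20, 30):
    # 30 years is 15 doublings, i.e. 2**15 = 32,768x
    print(f"after {years:>2} years: {growth_factor(years):,.0f}x")
```

The first 10 years give a 32x change, while the last 10 years of the 30 take you from roughly 1,000x to roughly 32,000x. That is the "later stages" effect described above.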

Specific to AI, which feeds into where I will take this, Booth says, “the explosion in knowledge and positive feedback loop from learning is accelerating to the point that we are finding it hard to keep up with the changes.”

Another way to think of it: you can get up to speed now by choice and learn how to use the technology, or you will be forced to get up to speed later by necessity, learning while you are already way behind the curve. As we have learned from many other technologies over the course of human history, there is no stopping them.

Recently, there was a bunch of hype, coming from virtually every information circle and source at some level, about OpenAI’s ChatGPT model.

“We’ve trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests” (Source).

The news about the platform was followed by many examples of people using ChatGPT to solicit AI-generated responses, studying its behavior, determining potential hazards and use cases, and a variety of other endeavors. During an exchange with Major Jason Lowery on Twitter, Jason sent me a Tweet containing a large amount of text (see below). I read the Tweet like I would any other Tweet, not immediately considering that it wasn’t Jason behind the keyboard, but instead an AI output.

A few minutes later, Jason posted the source of the text on another thread, which was illustrated as a screenshot of the ChatGPT 4.0 output that he generated. I was further intrigued, as I fleshed out broader thought processes between Softwar and AI, while reflecting on how good the AI solution looked in place of an expected human response.

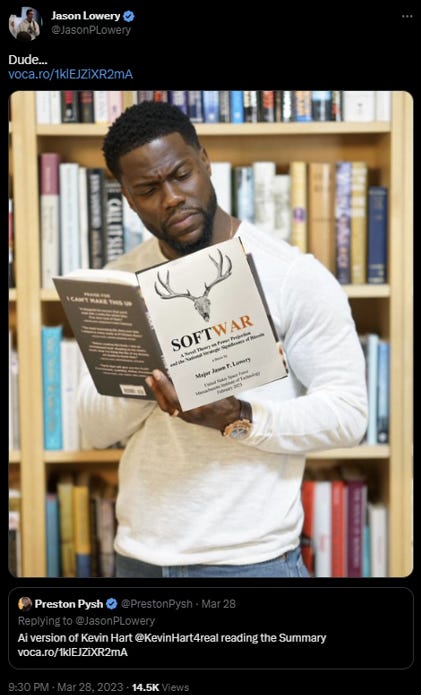

An hour later, Preston Pysh replied to Jason with a different ChatGPT output, which re-wrote Jason’s ChatGPT summary in the style of Kevin Hart (see below). Up to this point, I had seen some very compelling outputs from various AI tools that would draw, speak, write, etc. with stunning accuracy when compared against real life. However, what continued to stick out in my mind was how much more frequently this topic was coming up in conversation.

Later, Preston followed that Tweet with another, which linked to a recording platform hosting a clip produced by a different AI tool (voice). The output is Kevin Hart providing the same summary in his words and using his style.

This is a must listen to fully appreciate what I will say in the next parts!

When I listened to that I was truly mind blown at how well it was done, and I don’t mean how good the voice is (which is impressive). What I am more poking at, is that literally [no one] wrote that summary, and literally [no one] said those words! It was all a series of inputs and outputs from different AI tools. It is important to be clear here, for the sake of argument, the real Kevin Hart didn’t say those words. Those weren’t his feelings, emotions, or thoughts, but they were mimicked extremely well. After listening to the clip a few times, my mind was racing as I better understood how far / quickly things had progressed, considered the potential benefits and risks that were now apparent, interconnected the data with my other research, and updated my mental models.

Major Jason Lowery highlighted this data by sharing it and adding a meme of Kevin Hart reading the Softwar book (see below – obviously photoshopped).

Reflecting on everything I have mentioned so far, and other data I have not mentioned (e.g. Saylor, Dorsey, Musk’s thoughts on AI / bots), a system level hazard became apparent. AI’s increasing ability to produce life-like data (e.g. text, audio, video), and doing that at larger and larger scales, could have severely negative implications on humanity’s ability to distinguish truth (e.g. what really happened) from AI-generated fantasy. At some point, it could even be hard to distinguish between what gets generated from human inputs and what gets generated from AI inputs. Worst case, it would be a false reality [no one] created that [no one] weaponized against us.

The danger is that, over time these AI data sets will become so massive, so prolific, and so easily accessible / usable (by both humans and AI), they would overwhelm and obfuscate humanity’s ability to distinguish truth from AI-generated fantasy.

The concern here isn’t that digital forensics won’t be available to ultimately distinguish / prove the data for those who are doing their homework. The concern is that without an explicit process that is easy to understand and execute, humanity is more likely to capitulate to the sea of infinite convenience and fantasy. To illustrate my point, quickly consider how many people are [still] fooled by basic human-generated scams / manipulations like the Nigerian prince email and bad photoshops.

“The “Nigerian prince” email scam is perhaps one of the longest-running Internet frauds.” “Americans lost $703,000 last year to these types of frauds” (Source).

Again, as AI gets better (and it seems good enough now), it will be possible to quickly generate endless text, clips, images, and recordings of whoever, doing / saying whatever, regardless of their knowledge or approval of the activity. Where it becomes tricky is that these digital renditions will be indistinguishable from reality in enough ways to fool anyone who doesn’t identify / consider the source (e.g. from Kevin Hart or not, real or not real). I consider this data to be uniquely hazardous, recognizing the nature of 5th Generation Warfare, considering how AI could be used in psychological operations to generate desired behaviors / effects from a target audience, and how AI has already been used in increasingly complex ways over the decades (for better and worse). Ultimately, it does not appear we have a choice in the matter regarding whether this hazard will exist, as there is evidence it is already here. Therefore, we must dig deep to understand and harness the power of these technologies and mitigate the known risks. Left unmanaged, it would be devastating for humanity.

While current efforts to fool the population seem to originate from human inputs caused by bad incentives, we must recognize that some subset of the AI suite will eventually learn, and probabilistically use, these same methodologies anyway. Therefore, as Jason indicates (see re-Tweet below), the key will be to have something that the AI does not have, specifically a mechanism that can be used to impose “severe physical costs” against these AI bot farms.

Putting that another way, we would need access to a valuable resource the AI wouldn’t be able to replicate, easily acquire, and use for various purposes (at least at scale). To ensure we have this mechanism when we need it, we must take the hazards seriously and begin implementing the solutions now. Keep in mind, the point of need may already be in the past. How far in the past? Well, take a look at the video below, which highlights BlackRock’s Aladdin tool. You should quickly see how long AI has been in the works and how much control some AI tools have already amassed.

Fortunately, while we may not be able to stop the existence of these AI-generated digital renditions as we move forward, we already have a tool that can be used to impose “severe physical costs” against the AI data / bots that exist already. Again, different ideas on hazards and mitigations involving all these technological breakthroughs exist.

The simple idea is that Bitcoin can be used to associate bits of data with an energy cost (a micro transaction), creating an auditable record of a real-world physical cost (energy transfer). The result is a clear and immutable record of all data that has “paid the cost” (right, wrong, or indifferent).

While this process wouldn’t automatically guarantee the remaining data set actually has any real value (e.g. truth), it does guarantee that a scarce resource was used to “provide access” into the database. These energy costs would be trivial for humans to pay, but cost prohibitive for AI to pay at scale. The “severe physical cost” is a necessary mitigation to ensure we do not lose ourselves in the machine.
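To make the filtration idea a little more concrete, here is a rough sketch of what "only show me data that has paid the cost" could look like. Everything in it is an assumption for illustration: the `PaidRecord` type, the `MIN_FEE_SATS` threshold, and the in-memory `ledger` dictionary are hypothetical stand-ins, and no real Bitcoin API is used. A real version would have to look the payment up on-chain.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical threshold: the smallest fee (in satoshis) that counts as "paying the cost".
MIN_FEE_SATS = 100

@dataclass(frozen=True)
class PaidRecord:
    """Hypothetical record linking a piece of data to a payment."""
    data_hash: str   # SHA-256 hex digest of the data
    fee_sats: int    # fee that was paid, in satoshis
    wallet: str      # paying wallet (placeholder string, not a real address)
    timestamp: int   # Unix time of the payment

def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest used to look the data up in the ledger."""
    return hashlib.sha256(data).hexdigest()

def has_paid_cost(data: bytes, ledger: dict[str, PaidRecord]) -> bool:
    """True only if the ledger holds a record for this exact data with a large enough fee."""
    record = ledger.get(fingerprint(data))
    return record is not None and record.fee_sats >= MIN_FEE_SATS

def filter_paid(messages: list[bytes], ledger: dict[str, PaidRecord]) -> list[bytes]:
    """Keep only the messages that have a qualifying payment record."""
    return [m for m in messages if has_paid_cost(m, ledger)]

# Usage: one post has a payment record, the other does not.
human_post = b"I wrote this myself."
bot_post = b"Generated at scale."
ledger = {
    fingerprint(human_post): PaidRecord(
        fingerprint(human_post), 250, "wallet-placeholder", 1_700_000_000
    )
}
print(filter_paid([human_post, bot_post], ledger))  # [b'I wrote this myself.']
```

Note that passing the filter says nothing about whether the post is true. It only says that someone paid for it, which is exactly the limitation described above.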

As just one illustration of how this could work, here is an asset I created using the Counterparty protocol, a layer built on top of Bitcoin. Click the card to see the animation and hear the audio track. This shows that the building blocks to mitigate this hazard already exist on Bitcoin. We can verify that a price was paid to store this data on Bitcoin. We can verify when it was done and which wallet performed the action, among other information.

The concept of using Bitcoin to defeat bot farms isn’t new or exclusive to Softwar, with others such as Michael Saylor, Jack Dorsey and Elon Musk describing different scenarios. The goal of this publication was to help provide a unique perspective on this bigger picture for those who may be unaware of the technological shifts that are occurring constantly all around us and the potential implications.

To summarize in a different way, Bitcoin can be used to filter out the noise of AI-generated data in clever ways, requiring a “price to be paid” to help uniquely identify the communication from other AI data that might otherwise be indistinguishable from reality.

General Flynn put out a comparison of AI and Nuclear Warfare. The piece describes how AI is already pointed at us in the area of 5th Generation Warfare:

“Foreign-born AI-driven psychological programming has emerged as a formidable weapon in the arsenal of fifth-generation warfare. It wreaks havoc on the social, political and psychological fabric of our nation. Although the methods of attack differ, the devastation caused by foreign-born AI-driven psychological programming causes lasting damage to a nation’s core.” (Source).

The data provides yet another compelling reason why Bitcoin is way more important than we imagine, as it provides a way to impose “severe physical costs” against the AI! While these micro transactions would be insignificant to real humans, paying the price on an infinite set of AI data would be cost prohibitive. Implementing these types of solutions may be the only way our future selves can distinguish between what is real and what is not. Please, do not give your Bitcoin to AI. Kek.
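For a back-of-the-napkin feel of "insignificant to humans, cost prohibitive at scale," here is a small sketch. The fee and the message volumes are made-up assumptions, not measurements. The only fixed fact is that 1 BTC equals 100,000,000 satoshis.

```python
# All volumes and the fee below are made-up assumptions for illustration.
FEE_SATS_PER_MESSAGE = 100      # hypothetical fee attached to each message
SATS_PER_BTC = 100_000_000      # fixed: 1 BTC = 100,000,000 satoshis

def daily_cost_btc(messages_per_day: int, fee_sats: int = FEE_SATS_PER_MESSAGE) -> float:
    """Total daily cost in BTC of attaching `fee_sats` to every message sent."""
    return messages_per_day * fee_sats / SATS_PER_BTC

human = daily_cost_btc(20)              # a person posting 20 times a day
bot_farm = daily_cost_btc(10_000_000)   # a bot farm posting 10 million times a day

print(f"human:    {human:.5f} BTC per day")     # 0.00002 BTC
print(f"bot farm: {bot_farm:.5f} BTC per day")  # 10.00000 BTC
```

The cost grows in direct proportion to volume, so how severe it really is depends on where the fee is set and how deep the bot farm's pockets are.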

Shadilay!

Badlands Media articles and features represent the opinions of the contributing authors and do not necessarily represent the views of Badlands Media itself.

If you enjoyed this contribution to Badlands Media, please consider checking out more of my work for free at Patriots in Progress.

I've been following AI and automation for several years now as it intersects with the WEF's agenda on multiple levels. It's only now that the herd of Normies is getting a glimpse of what it can do, and this literally is just the beginning of an economic paradigm shift. This is far more profound than the steam age industrial revolution, or the advent of electricity in the average home.

The assumption was AI would replace tedious, low skill labor, but it's actually replacing white collar labor more aggressively. Those layoffs you saw from several Big Tech companies are going to rapidly escalate as Copilot and Google Workspace compete to bring the most productivity enhancing features to their platforms. There's a reason why program developers are popping sedatives like skittles.

Walmart announced just earlier in the week they were going to replace up to 65% of their labor force with automation, catching up and surpassing what Amazon is doing. The other chains either follow suit, or get left behind. These giants employ the unskilled, and Walmart is particularly egregious in their exploitation of employees and low wages/benefits. Now those laid off will have nothing and nowhere to go.

Industries such as pharmacies, health care, education, federal, state and local gov't, financial, these all comprise the majority of the US Job market. They won't be eliminated, but they will need a heck of a lot less people and no, the jobs lost will not "easily" be replaced with new jobs servicing the bots or AI.

What people don't grasp is how AI develops: it's not linear, and it's not even a steady doubling like Moore's Law of computing power. It's **accelerating faster than that**. And more importantly, it's self-correcting, as it's part of a massive network, each node learning a new thing or an old thing done in a new way.

The job displacement will be staggering. And don't think that UBI and CBDCs don't factor into this. UBI will be just enough to keep the masses obedient as all their needs will be paid with a CBDC that will have programmed controls such as what/where/whom you can buy from and expiration dates.

The thing to note is that this revolution holds a tremendous promise for humanity, provided it is in the hands of those who love humanity. That is unlikely to be the case. Whoever wins the AI race controls the world, and so far that's the psychopaths calling themselves our community positive corporations and their political minions. We're already on the receiving end of the early stages of this stuff, as mentioned in the article quoting General Flynn.

So yes, Bitcoin, gold, silver, and lead. And also, if possible, homesteading, preferably with like-minded people to form a community. The best and most effective way to not need UBI and their CBDCs is to be as self-sufficient as possible.

I wholeheartedly agree with the notion that we must strive to understand and harness the power of these technologies to ensure a prosperous future for generations to come. Being proactive and adaptive is crucial in order to mitigate potential risks and maximize the benefits. Ignorance or resistance, on the other hand, will only lead to unintended consequences and potential misuse by nefarious actors.

The author's approach to exploring each technology's complexities and potential impacts is commendable. Encouraging readers to think critically, do their own research, and draw their own conclusions is essential to fostering a well-informed society. This collective effort is vital in navigating the multifaceted challenges posed by these transformative technologies.